diff --git a/CHANGELOG.md b/CHANGELOG.md

index b75b8262..81930604 100644

--- a/CHANGELOG.md

+++ b/CHANGELOG.md

@@ -2,6 +2,13 @@

+## v0.37.1 (2021-08-06)

+### Fix

+* TT-302 Fix URLLIB3 dependencies vulnerabilities ([#313](https://github.com/ioet/time-tracker-backend/issues/313)) ([`f7aba96`](https://github.com/ioet/time-tracker-backend/commit/f7aba96802a629d2829fc09606c67a07364c3016))

+

+### Documentation

+* TT-301 Update readme documentation and add Time Tracker CLI docs ([#314](https://github.com/ioet/time-tracker-backend/issues/314)) ([`58dbc15`](https://github.com/ioet/time-tracker-backend/commit/58dbc1588576d1603162e5d29780b315f5f784a5))

+

## v0.37.0 (2021-07-26)

### Feature

* TT-293 Create Script to generate data in the Database ([#310](https://github.com/ioet/time-tracker-backend/issues/310)) ([`50f8d46`](https://github.com/ioet/time-tracker-backend/commit/50f8d468d77835a6f90b958d4642d338f36d5f37))

diff --git a/README.md b/README.md

index 63029102..25bbe017 100644

--- a/README.md

+++ b/README.md

@@ -6,78 +6,202 @@ This is the mono-repository for the backend services and their common codebase

## Getting started

-Follow the following instructions to get the project ready to use ASAP.

+Follow the instructions below to get the project ready to use ASAP.

-### Requirements

+Currently, there are two ways to run the project: production mode, which uses a virtual environment with all the necessary libraries installed in it,

+and development mode, which uses Docker and docker-compose. Development mode is recommended; use production mode only in special cases.

-Be sure you have installed in your system

+## Requirements:

+

+For both modes it is necessary to have the following requirements installed:

- [Python version 3](https://www.python.org/download/releases/3.0/) (recommended 3.8 or less) in your path. It will install

automatically [pip](https://pip.pypa.io/en/stable/) as well.

-- A virtual environment, namely [venv](https://docs.python.org/3/library/venv.html).

+- A virtual environment, namely [.venv](https://docs.python.org/3/library/venv.html).

- Optionally for running Azure functions locally: [Azure functions core tool](https://docs.microsoft.com/en-us/azure/azure-functions/functions-run-local?tabs=macos%2Ccsharp%2Cbash).

-### Setup

+## Settings for each mode

+

+Before proceeding to the configuration for each of the modes,

+it is important to perform the following step regardless of the mode to be used.

+

+### Create a virtual environment

-- Create and activate the environment,

+Execute the next command at the root of the project:

- In Windows:

+```shell

+python -m venv .venv

+```

+

+> **Note:** You can replace `python` with `python3` or `python3.8`, depending on the version you have installed,

+> but keep the initial requirements in mind.

- ```

- #Create virtual enviroment

- python -m venv .venv

+**Activate the environment**

- #Execute virtual enviroment

- .venv\Scripts\activate.bat

- ```

+Windows:

+```shell

+.venv\Scripts\activate.bat

+```

- In Unix based operative systems:

+Unix-based operating systems:

- ```

- #Create virtual enviroment

- virtualenv .venv

+```shell

+source .venv/bin/activate

+```

- #Execute virtual enviroment

- source .venv/bin/activate

- ```

+### Setup for each mode

-**Note:** If you're a linux user you will need to install an additional dependency to have it working.

+The configuration required for each of the modes is as follows:

-Type in the terminal the following command to install the required dependency to have pyodbc working locally:

+

+ Development Mode

+

+### Requirements:

-```sh

-sudo apt-get install unixodbc-dev

+In addition to the initial requirements, it is necessary to have the following requirements installed:

+

+- Docker

+

+ You can follow the instructions below to install on each of the following operating systems:

+ - [**Mac**](https://docs.docker.com/docker-for-mac/install/)

+ - [**Linux**](https://docs.docker.com/engine/install/)

+ - [**Windows**](https://docs.docker.com/docker-for-windows/install/)

+

+- Docker Compose

+

+ To install Docker Compose, please choose the operating system you use and follow the steps [here](https://docs.docker.com/compose/install/).

+

+### Setup

+

+Once Docker and Docker Compose are installed, create a `.env` file in the root of the project containing the following environment variables.

+

+```shell

+export MS_AUTHORITY=XXXX

+export MS_CLIENT_ID=XXXX

+export MS_SCOPE=XXXX

+export MS_SECRET=yFo=XXXX

+export MS_ENDPOINT=XXXX

+export DATABASE_ACCOUNT_URI=XXXX

+export DATABASE_MASTER_KEY=XXXX

+export DATABASE_NAME=XXXX

+export FLASK_APP=XXXX

+export AZURE_APP_CONFIGURATION_CONNECTION_STRING=XXXX

+export FLASK_DEBUG=XXXX

+export REQUESTS_CA_BUNDLE=XXXX

```

+> **Please, contact the project development team for the content of the variables mentioned above.**
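
A quick way to confirm that the `.env` file was loaded is to check that every variable listed above is set. The following is a minimal sketch and not part of the project; the helper name `missing_variables` is hypothetical, and the variable names are copied from the listing above.

```python
import os

# Variable names copied from the `.env` listing above.
REQUIRED_VARIABLES = [
    "MS_AUTHORITY",
    "MS_CLIENT_ID",
    "MS_SCOPE",
    "MS_SECRET",
    "MS_ENDPOINT",
    "DATABASE_ACCOUNT_URI",
    "DATABASE_MASTER_KEY",
    "DATABASE_NAME",
    "FLASK_APP",
    "AZURE_APP_CONFIGURATION_CONNECTION_STRING",
    "FLASK_DEBUG",
    "REQUESTS_CA_BUNDLE",
]


def missing_variables(environ=None):
    """Return the required variable names that are unset or empty."""
    environ = os.environ if environ is None else environ
    return [name for name in REQUIRED_VARIABLES if not environ.get(name)]


if __name__ == "__main__":
    missing = missing_variables()
    if missing:
        print("Missing environment variables: " + ", ".join(missing))
    else:
        print("All required environment variables are set.")
```

Running it in the same shell where the `.env` file was sourced should report which variables, if any, still need a value.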

+

+### Run containers

+

+Once the project is configured, execute the following command in a terminal from the root folder of the project:

+

+```shell

+docker-compose up --build

+```

+

+This command builds all the images with the necessary configuration for each one and

+starts the Cosmos emulator together with the backend. You can then open in the browser:

+

+- `http://127.0.0.1:5000/` to open the backend API.

+- `https://127.0.0.1:8081/_explorer/index.html` to open the Cosmos DB emulator.

+

+> If you have already run `docker-compose up --build` in this project,

+> you do not need to build again; run this instead:

+> `docker-compose up`

+

+> If packages or any extra configuration are added to the image build,

+> you need to run `docker-compose up --build` again. You can find more information about this flag [here](https://docs.docker.com/compose/reference/up/).

+

+### Development

+

+#### Generate Fake Data

+

+In order to generate fake data to test functionalities or correct errors,

+we have built a CLI, called 'Time Tracker CLI', which is in charge of generating

+the fake information inside the Cosmos emulator.

+

+To learn how this CLI works, you can see the instructions [here](https://github.com/ioet/time-tracker-backend/tree/master/cosmosdb_emulator)

+

+> It is important to clarify that Time Tracker CLI only works in development mode.

+

+### Test

+

+We are using [Pytest](https://docs.pytest.org/en/latest/index.html) for tests. The tests are located in the package

+`tests` and use the [conventions for python test discovery](https://docs.pytest.org/en/latest/goodpractices.html#test-discovery).

+

+> Remember that the containers must be up (`docker-compose up`) before you run any of the test commands.

+

+This command runs all tests:

+

+```shell

+./time-tracker.sh pytest -v

+```

+

+Run a single test:

+

+```shell

+./time-tracker.sh pytest -v -k name-test

+```

+

+#### Coverage

+

+To check the coverage of the tests execute:

+

+```shell

+./time-tracker.sh coverage run -m pytest -v

+```

+

+To get a report table:

+

+```shell

+./time-tracker.sh coverage report

+```

+

+To get a full report in html:

+

+```shell

+./time-tracker.sh coverage html

+```

+Then check in the [htmlcov/index.html](./htmlcov/index.html) to see it.

+

+To erase previously collected coverage data, execute:

+

+```shell

+./time-tracker.sh coverage erase

+```

+

+

-- Install the requirements:

+

- ```

- python3 -m pip install -r requirements//.txt

- ```

+

+ Production Mode

- If you use Windows, you will use this comand:

+### Setup

+

+#### Install the requirements:

+

+```

+python3 -m pip install -r requirements//.txt

+```

- ```

- python -m pip install -r requirements//.txt

- ```

+If you use Windows, you will use this command:

- Where `` is one of the executable app namespace, e.g. `time_tracker_api` or `time_tracker_events` (**Note:** Currently, only `time_tracker_api` is used.). The `stage` can be

+```

+python -m pip install -r requirements//.txt

+```

- - `dev`: Used for working locally

- - `prod`: For anything deployed

+Where `` is one of the executable app namespace, e.g. `time_tracker_api` or `time_tracker_events` (**Note:** Currently, only `time_tracker_api` is used.). The `stage` can be

-Remember to do it with Python 3.

+- `dev`: Used for working locally

+- `prod`: For anything deployed

Bear in mind that the requirements for `time_tracker_events`, must be located on its local requirements.txt, by

[convention](https://docs.microsoft.com/en-us/azure/azure-functions/functions-reference-python#folder-structure).

-- Run `pre-commit install -t pre-commit -t commit-msg`. For more details, see section Development > Git hooks.

-

### Set environment variables

-Set environment variables with the content pinned in our slack channel #time-tracker-developer:

-

-When you use Bash or GitBash you should use:

+If you use Bash or Git Bash, create a `.env` file and add the following variables:

```

export MS_AUTHORITY=XXX

@@ -93,7 +217,7 @@ export AZURE_APP_CONFIGURATION_CONNECTION_STRING=XXX

export FLASK_DEBUG=True

```

-If you use PowerShell, you should use:

+If you use PowerShell, create a `.env.ps1` file and add the following variables:

```

$env:MS_AUTHORITY="XXX"

@@ -109,7 +233,7 @@ $env:AZURE_APP_CONFIGURATION_CONNECTION_STRING="XXX"

$env:FLASK_DEBUG="True"

```

-If you use Command Prompt, you should use:

+If you use Command Prompt, create a `.env.bat` file and add the following variables:

```

set "MS_AUTHORITY=XXX"

@@ -125,21 +249,114 @@ set "AZURE_APP_CONFIGURATION_CONNECTION_STRING=XXX"

set "FLASK_DEBUG=True"

```

-**Note:** You can create .env (Bash, GitBash), .env.bat (Command Prompt), .env.ps1 (PowerShell) files with environment variables and run them in the corresponding console.

-

-Important: You should set the environment variables each time the application is run.

+> **Important:** Ask the development team for the values of the environment variables. You must also

+> set the environment variables each time the application is run.

-### How to use it

+### Run application

- Start the app:

- ```

- flask run

- ```

+```shell

+flask run

+```

- Open `http://127.0.0.1:5000/` in a browser. You will find in the presented UI

a link to the swagger.json with the definition of the api.

+### Test

+

+We are using [Pytest](https://docs.pytest.org/en/latest/index.html) for tests. The tests are located in the package

+`tests` and use the [conventions for python test discovery](https://docs.pytest.org/en/latest/goodpractices.html#test-discovery).

+

+This command runs all tests:

+

+```shell

+pytest -v

+```

+

+> **Note:** If you get the error "No module named azure.functions", execute the command `pip install azure-functions`.

+

+To run a single test:

+

+```shell

+pytest -v -k name-test

+```

+

+#### Coverage

+

+To check the coverage of the tests execute:

+

+```shell

+coverage run -m pytest -v

+```

+

+To get a report table:

+

+```shell

+coverage report

+```

+

+To get a full report in html:

+

+```shell

+coverage html

+```

+Then check in the [htmlcov/index.html](./htmlcov/index.html) to see it.

+

+To erase previously collected coverage data, execute:

+

+```shell

+coverage erase

+```

+

+

+

+

+

+### Git hooks

+We use the [pre-commit](https://github.com/pre-commit/pre-commit) library to manage local git hooks.

+

+This library allows you to execute code right before the commit, for example:

+- Check if the commit contains the correct formatting.

+- Format modified files based on a Style Guide such as PEP 8, etc

+

+To install `pre-commit` in development mode, run the following command:

+

+```shell

+python3 -m pip install pre-commit

+```

+

+Once the `pre-commit` library is installed, run the following in the virtual environment:

+```shell

+pre-commit install -t pre-commit -t commit-msg

+```

+

+> Remember to execute these commands with the virtual environment active.

+

+For more details, see section Development > Git hooks.

+

+With this command the library will take its configuration from `.pre-commit-config.yaml` and set up the hooks for us.

+

+### Commit message style

+

+Use the following commit message style. e.g:

+

+```shell

+'feat: TT-123 Applying some changes'

+'fix: TT-321 Fixing something broken'

+'feat(config): TT-00 Fix something in config files'

+```

+

+The value `TT-###` refers to the Jira issue that is being solved. Use TT-00 if the commit does not refer to any issue.
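
The style above can be checked mechanically. The following is a minimal sketch, assuming the pattern `<type>(<optional scope>): TT-<number> <description>`; the function name `parse_commit_message` is hypothetical, and because the README does not list the accepted type names, any lowercase word is allowed here.

```python
import re

# Assumed pattern: "<type>(<optional scope>): TT-<number> <description>".
# The accepted type names are not listed in the README, so any lowercase
# word is allowed as the type.
COMMIT_MESSAGE_PATTERN = re.compile(
    r"^(?P<type>[a-z]+)(\((?P<scope>[\w-]+)\))?: "
    r"(?P<issue>TT-\d+) (?P<description>\S.*)$"
)


def parse_commit_message(message):
    """Return the parts of a commit message, or None if it does not match."""
    match = COMMIT_MESSAGE_PATTERN.match(message)
    return match.groupdict() if match else None


print(parse_commit_message("feat(config): TT-00 Fix something in config files"))
print(parse_commit_message("Fixing something broken"))
```

The project's actual enforcement is done by the `commit-msg` hook configured in `.pre-commit-config.yaml`, which may be stricter than this sketch.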

+

+### Branch names format

+

+For example if your task in Jira is **TT-48 implement semantic versioning** your branch name is:

+

+```shell

+TT-48-implement-semantic-versioning

+```
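
As a small illustration of the convention, a branch name can be derived from the task title by replacing whitespace with hyphens. This is a sketch under that assumption only; the helper `branch_name` is hypothetical, and the README does not say how punctuation in a title should be handled.

```python
import re


def branch_name(task_title):
    """Build a branch name from a Jira task title by replacing
    each run of whitespace with a single hyphen."""
    return re.sub(r"\s+", "-", task_title.strip())


print(branch_name("TT-48 implement semantic versioning"))
```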

+

### Handling Cosmos DB triggers for creating events with time_tracker_events

The project `time_tracker_events` is an Azure Function project. Its main responsibility is to respond to calls related to

@@ -227,120 +444,6 @@ If you require to deploy `time_tracker_events` from your local machine to Azure

func azure functionapp publish time-tracker-events --build local

```

-## Development

-

-### Git hooks

-

-We use [pre-commit](https://github.com/pre-commit/pre-commit) library to manage local git hooks, as developers we just need to run in our virtual environment:

-

-```

-pre-commit install -t pre-commit -t commit-msg

-```

-

-With this command the library will take configuration from `.pre-commit-config.yaml` and will set up the hooks by us.

-

-### Commit message style

-

-Use the following commit message style. e.g:

-

-```

-'feat: TT-123 Applying some changes'

-'fix: TT-321 Fixing something broken'

-'feat(config): TT-00 Fix something in config files'

-```

-

-The value `TT-###` refers to the Jira issue that is being solved. Use TT-00 if the commit does not refer to any issue.

-

-### Branch names format

-

-For example if your task in Jira is **TT-48 implement semantic versioning** your branch name is:

-

-```

- TT-48-implement-semantic-versioning

-```

-

-### Test

-

-We are using [Pytest](https://docs.pytest.org/en/latest/index.html) for tests. The tests are located in the package

-`tests` and use the [conventions for python test discovery](https://docs.pytest.org/en/latest/goodpractices.html#test-discovery).

-

-#### Integration tests

-

-The [integrations tests](https://en.wikipedia.org/wiki/Integration_testing) verifies that all the components of the app

-are working well together. These are the default tests we should run:

-

-This command run all tests:

-

-```dotenv

-python3 -m pytest -v --ignore=tests/commons/data_access_layer/azure/sql_repository_test.py

-```

-

-In windows

-

-```

-python -m pytest -v --ignore=tests/commons/data_access_layer/azure/sql_repository_test.py

-```

-

-**Note:** If you get the error "No module named azure.functions", execute the command:

-

-```

-pip install azure-functions

-```

-

-To run a sigle test:

-

-```

-pytest -v -k name-test

-```

-

-As you may have noticed we are ignoring the tests related with the repository.

-

-#### System tests

-

-In addition to the integration testing we might include tests to the data access layer in order to verify that the

-persisted data is being managed the right way, i.e. it actually works. We may classify the execution of all the existing

-tests as [system testing](https://en.wikipedia.org/wiki/System_testing):

-

-```dotenv

-python3 -m pytest -v

-```

-

-The database tests will be done in the table `tests` of the database specified by the variable `SQL_DATABASE_URI`. If this

-variable is not specified it will automatically connect to SQLite database in-memory. This will do, because we are using

-[SQL Alchemy](https://www.sqlalchemy.org/features.html) to be able connect to any SQL database maintaining the same

-codebase.

-

-The option `-v` shows which tests failed or succeeded. Have into account that you can also debug each test

-(test\_\* files) with the help of an IDE like PyCharm.

-

-#### Coverage

-

-To check the coverage of the tests execute

-

-```bash

- coverage run -m pytest -v

-```

-

-To get a report table

-

-```bash

- coverage report

-```

-

-To get a full report in html

-

-```bash

- coverage html

-```

-

-Then check in the [htmlcov/index.html](./htmlcov/index.html) to see it.

-

-If you want that previously collected coverage data is erased, you can execute:

-

-```

-coverage erase

-```

-

### CLI

There are available commands, aware of the API, that can be very helpful to you. You

@@ -374,22 +477,6 @@ standard commit message style.

[python-semantic-release](https://python-semantic-release.readthedocs.io/en/latest/commands.html#publish) for details of

underlying operations.

-## Run as docker container

-

-1. Build image

-

-```bash

-docker build -t time_tracker_api:local .

-```

-

-2. Run app

-

-```bash

-docker run -p 5000:5000 time_tracker_api:local

-```

-

-3. Visit `127.0.0.1:5000`

-

## Migrations

Looking for a DB-agnostic migration tool, the only choice I found was [migrate-anything](https://pypi.org/project/migrate-anything/).

@@ -438,13 +525,6 @@ They will be automatically run during the Continuous Deployment process.

Shared file with all the Feature Toggles we create, so we can have a history of them

[Feature Toggles dictionary](https://github.com/ioet/time-tracker-ui/wiki/Feature-Toggles-dictionary)

-## Support for docker-compose and cosmosdb emulator

-

-To run the dev enviroment in docker-compose:

-```bash

-docker-compose up

-```

-

## More information about the project

[Starting in Time Tracker](https://github.com/ioet/time-tracker-ui/wiki/Time-tracker)

diff --git a/commons/data_access_layer/cosmos_db.py b/commons/data_access_layer/cosmos_db.py

index 9cdf7f1c..3c8555d0 100644

--- a/commons/data_access_layer/cosmos_db.py

+++ b/commons/data_access_layer/cosmos_db.py

@@ -1,15 +1,15 @@

import dataclasses

import logging

-from typing import Callable, List

+from typing import Callable

import azure.cosmos.cosmos_client as cosmos_client

import azure.cosmos.exceptions as exceptions

-import flask

from azure.cosmos import ContainerProxy, PartitionKey

from flask import Flask

from werkzeug.exceptions import HTTPException

from commons.data_access_layer.database import CRUDDao, EventContext

+from utils.query_builder import CosmosDBQueryBuilder

class CosmosDBFacade:

@@ -124,55 +124,6 @@ def from_definition(

custom_cosmos_helper=custom_cosmos_helper,

)

- @staticmethod

- def create_sql_condition_for_visibility(

- visible_only: bool, container_name='c'

- ) -> str:

- if visible_only:

- # We are considering that `deleted == null` is not a choice

- return 'AND NOT IS_DEFINED(%s.deleted)' % container_name

- return ''

-

- @staticmethod

- def create_sql_active_condition(

- status_value: str, container_name='c'

- ) -> str:

- if status_value != None:

- not_defined_condition = ''

- condition_operand = ' AND '

- if status_value == 'active':

- not_defined_condition = (

- 'AND NOT IS_DEFINED({container_name}.status)'.format(

- container_name=container_name

- )

- )

- condition_operand = ' OR '

-

- defined_condition = '(IS_DEFINED({container_name}.status) \

- AND {container_name}.status = \'{status_value}\')'.format(

- container_name=container_name, status_value=status_value

- )

- return (

- not_defined_condition + condition_operand + defined_condition

- )

-

- return ''

-

- @staticmethod

- def create_sql_where_conditions(

- conditions: dict, container_name='c'

- ) -> str:

- where_conditions = []

- for k in conditions.keys():

- where_conditions.append(f'{container_name}.{k} = @{k}')

-

- if len(where_conditions) > 0:

- return "AND {where_conditions_clause}".format(

- where_conditions_clause=" AND ".join(where_conditions)

- )

- else:

- return ""

-

@staticmethod

def generate_params(conditions: dict) -> list:

result = []

@@ -206,16 +157,6 @@ def attach_context(data: dict, event_context: EventContext):

"session_id": event_context.session_id,

}

- @staticmethod

- def create_sql_date_range_filter(date_range: dict) -> str:

- if 'start_date' in date_range and 'end_date' in date_range:

- return """

- AND ((c.start_date BETWEEN @start_date AND @end_date) OR

- (c.end_date BETWEEN @start_date AND @end_date))

- """

- else:

- return ''

-

def create(

self, data: dict, event_context: EventContext, mapper: Callable = None

):

@@ -257,53 +198,38 @@ def find_all(

mapper: Callable = None,

):

conditions = conditions if conditions else {}

- partition_key_value = self.find_partition_key_value(event_context)

- max_count = self.get_page_size_or(max_count)

- params = [

- {"name": "@partition_key_value", "value": partition_key_value},

- {"name": "@offset", "value": offset},

- {"name": "@max_count", "value": max_count},

- ]

-

- status_value = None

- if conditions.get('status') != None:

- status_value = conditions.get('status')

+ max_count: int = self.get_page_size_or(max_count)

+

+ status_value = conditions.get('status')

+ if status_value:

conditions.pop('status')

date_range = date_range if date_range else {}

- date_range_params = (

- self.generate_params(date_range) if date_range else []

- )

- params.extend(self.generate_params(conditions))

- params.extend(date_range_params)

-

- query_str = """

- SELECT * FROM c

- WHERE c.{partition_key_attribute}=@partition_key_value

- {conditions_clause}

- {active_condition}

- {date_range_sql_condition}

- {visibility_condition}

- {order_clause}

- OFFSET @offset LIMIT @max_count

- """.format(

- partition_key_attribute=self.partition_key_attribute,

- visibility_condition=self.create_sql_condition_for_visibility(

- visible_only

- ),

- active_condition=self.create_sql_active_condition(status_value),

- conditions_clause=self.create_sql_where_conditions(conditions),

- date_range_sql_condition=self.create_sql_date_range_filter(

- date_range

- ),

- order_clause=self.create_sql_order_clause(),

+

+ query_builder = (

+ CosmosDBQueryBuilder()

+ .add_sql_where_equal_condition(conditions)

+ .add_sql_active_condition(status_value)

+ .add_sql_date_range_condition(date_range)

+ .add_sql_visibility_condition(visible_only)

+ .add_sql_limit_condition(max_count)

+ .add_sql_offset_condition(offset)

+ .build()

)

+ if len(self.order_fields) > 1:

+ attribute = self.order_fields[0]

+ order = self.order_fields[1]

+ query_builder.add_sql_order_by_condition(attribute, order)

+

+ query_str = query_builder.get_query()

+ params = query_builder.get_parameters()

+ partition_key_value = self.find_partition_key_value(event_context)

+

result = self.container.query_items(

query=query_str,

parameters=params,

partition_key=partition_key_value,

- max_item_count=max_count,

)

function_mapper = self.get_mapper_or_dict(mapper)
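
The `find_all` change above swaps string formatting for chained calls on `CosmosDBQueryBuilder`, whose implementation is not part of this diff. The following is a minimal sketch of the fluent-builder pattern only; the class `SketchQueryBuilder` and its method names are hypothetical and are not the project's API.

```python
class SketchQueryBuilder:
    """Hypothetical fluent builder; not the project's CosmosDBQueryBuilder."""

    def __init__(self, container_alias="c"):
        self.alias = container_alias
        self.where_conditions = []
        self.parameters = []
        self.limit = None
        self.offset = None
        self.query = ""

    def add_where_equal(self, conditions):
        # One "alias.key = @key" clause plus one parameter per condition.
        for key, value in conditions.items():
            self.where_conditions.append(f"{self.alias}.{key} = @{key}")
            self.parameters.append({"name": f"@{key}", "value": value})
        return self

    def add_visibility(self, visible_only):
        # Mirrors the removed helper: visible items have no `deleted` field.
        if visible_only:
            self.where_conditions.append(
                f"NOT IS_DEFINED({self.alias}.deleted)"
            )
        return self

    def add_offset_limit(self, offset, limit):
        self.offset = offset
        self.limit = limit
        self.parameters.append({"name": "@offset", "value": offset})
        self.parameters.append({"name": "@max_count", "value": limit})
        return self

    def build(self):
        # Assemble the final query string from the collected pieces.
        parts = [f"SELECT * FROM {self.alias}"]
        if self.where_conditions:
            parts.append("WHERE " + " AND ".join(self.where_conditions))
        if self.limit is not None:
            parts.append("OFFSET @offset LIMIT @max_count")
        self.query = " ".join(parts)
        return self


builder = (
    SketchQueryBuilder()
    .add_where_equal({"owner_id": "mark"})
    .add_visibility(True)
    .add_offset_limit(0, 10)
    .build()
)
print(builder.query)
print(builder.parameters)
```

Each method returns `self`, which is what makes the chained style possible; the query text and its parameters are collected together, so they cannot drift apart the way separately formatted strings and parameter lists can.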

diff --git a/cosmosdb_emulator/README.md b/cosmosdb_emulator/README.md

new file mode 100644

index 00000000..20103ced

--- /dev/null

+++ b/cosmosdb_emulator/README.md

@@ -0,0 +1,90 @@

+# Time Tracker CLI

+

+Here you can find all the source code of the Time Tracker CLI.

+This is responsible for automatically generating fake data for the Cosmos emulator,

+in order to have information when testing new features or correcting bugs.

+

+> This feature is only available in development mode.

+

+## Prerequisites

+

+- Backend and cosmos emulator containers up.

+- Environment variables correctly configured

+

+### Environment Variables.

+

+The main environment variables that you need to take into account are the following:

+

+```shell

+export DATABASE_ACCOUNT_URI=https://azurecosmosemulator:8081

+export DATABASE_MASTER_KEY=C2y6yDjf5/R+ob0N8A7Cgv30VRDJIWEHLM+4QDU5DE2nQ9nDuVTqobD4b8mGGyPMbIZnqyMsEcaGQy67XIw/Jw==

+export DATABASE_NAME=time_tracker_testing_database

+```

+Verify that the variables are the same as those shown above.

+

+## How to use Time Tracker CLI?

+

+From the project's root folder, change to the `cosmosdb_emulator` folder and open a terminal there.

+

+We have two main alternatives for running the CLI:

+

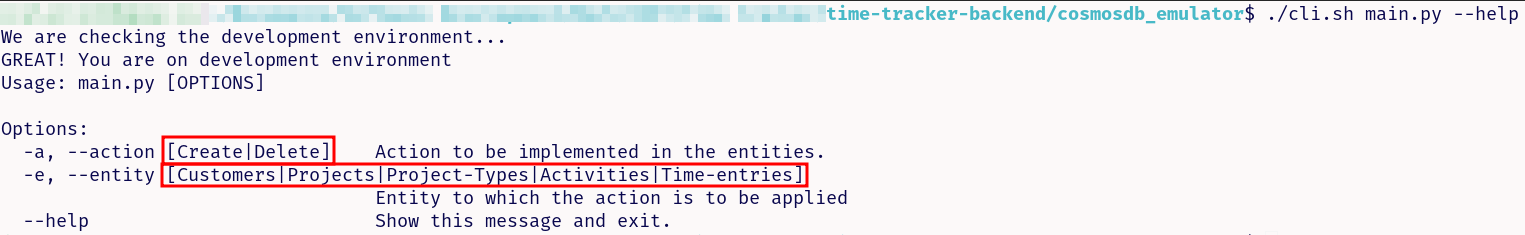

+### Execute CLI with flags.

+

+To see all the available flags for the CLI, execute the following command:

+

+```shell

+./cli.sh main.py --help

+```

+

+When executing the above command, the following information will be displayed:

+

+

+

+Where you can see the actions we can perform on a given Entity:

+

+Currently, the CLI only allows the creation of Time-entries and allows the deletion of any entity.

+

+Available Actions:

+

+- Create: Allows creating new fake data about a certain entity.

+- Delete: Allows deleting information of an entity in the cosmos emulator.

+

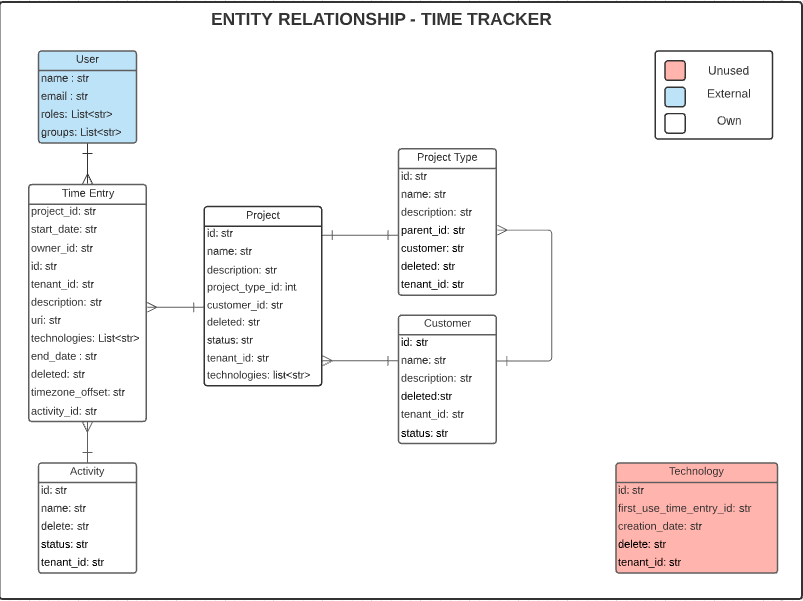

+> To delete information about a certain entity, take into account the relationships

+that this entity has with other entities, since the related information will also be deleted.

+The following diagram can be used as a reference:

+

+

+Available Entities:

+

+- Customers

+- Projects

+- Project-Types

+- Activities

+- Time-entries

+

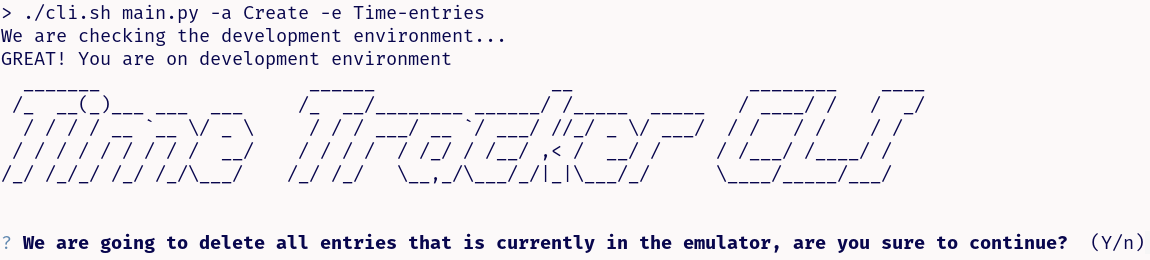

+Given the actions available for each entity, we can run the following command

+to generate entries:

+```shell

+./cli.sh main.py -a Create -e Time-entries

+```

+

+The result of this command will be as follows:

+

+

+

+In this way we can continue with the generation of entities in an interactive way.

+

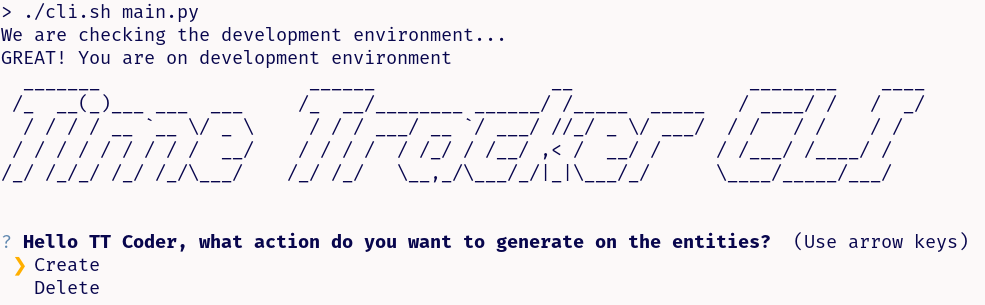

+### Execute CLI in an interactive way

+

+To run the CLI interactively, we need to execute the following command:

+

+```shell

+./cli.sh main.py

+```

+After executing the above command, the following will be displayed:

+

+

+

+This way we can interact dynamically with the CLI for the generation/deletion of entities.

+

+> Currently, for the generation of personal entries it is necessary to know the identifier of our user within Time Tracker.

\ No newline at end of file

diff --git a/requirements/azure_cosmos.txt b/requirements/azure_cosmos.txt

index f4d95df0..62ae1c17 100644

--- a/requirements/azure_cosmos.txt

+++ b/requirements/azure_cosmos.txt

@@ -9,7 +9,7 @@ certifi==2019.11.28

chardet==3.0.4

idna==2.8

six==1.13.0

-urllib3==1.25.8

+urllib3==1.26.5

virtualenv==16.7.9

virtualenv-clone==0.5.3

diff --git a/requirements/commons.txt b/requirements/commons.txt

index 9b5d811c..aef1f707 100644

--- a/requirements/commons.txt

+++ b/requirements/commons.txt

@@ -3,7 +3,7 @@

# For Common dependencies

# Handling requests

-requests==2.23.0

+requests==2.25.1

# To create sample content in tests and API documentation

Faker==4.0.2

diff --git a/requirements/time_tracker_api/dev.txt b/requirements/time_tracker_api/dev.txt

index 6d8a1599..302acb78 100644

--- a/requirements/time_tracker_api/dev.txt

+++ b/requirements/time_tracker_api/dev.txt

@@ -14,9 +14,6 @@ pytest-mock==2.0.0

# Coverage

coverage==4.5.1

-# Git hooks

-pre-commit==2.2.0

-

# CLI tools

PyInquirer==1.0.3

pyfiglet==0.7

diff --git a/tests/commons/data_access_layer/cosmos_db_test.py b/tests/commons/data_access_layer/cosmos_db_test.py

index c7a04eaf..07548988 100644

--- a/tests/commons/data_access_layer/cosmos_db_test.py

+++ b/tests/commons/data_access_layer/cosmos_db_test.py

@@ -660,28 +660,6 @@ def test_delete_permanently_with_valid_id_should_succeed(

assert e.status_code == 404

-def test_repository_create_sql_where_conditions_with_multiple_values(

- cosmos_db_repository: CosmosDBRepository,

-):

- result = cosmos_db_repository.create_sql_where_conditions(

- {'owner_id': 'mark', 'customer_id': 'me'}, "c"

- )

-

- assert result is not None

- assert (

- result == "AND c.owner_id = @owner_id AND c.customer_id = @customer_id"

- )

-

-

-def test_repository_create_sql_where_conditions_with_no_values(

- cosmos_db_repository: CosmosDBRepository,

-):

- result = cosmos_db_repository.create_sql_where_conditions({}, "c")

-

- assert result is not None

- assert result == ""

-

-

def test_repository_append_conditions_values(

cosmos_db_repository: CosmosDBRepository,

):

diff --git a/tests/time_tracker_api/activities/activities_model_test.py b/tests/time_tracker_api/activities/activities_model_test.py

index e9ea54b3..37c61e0f 100644

--- a/tests/time_tracker_api/activities/activities_model_test.py

+++ b/tests/time_tracker_api/activities/activities_model_test.py

@@ -1,16 +1,16 @@

-from unittest.mock import Mock, patch

-import pytest

-

+import copy

+from unittest.mock import Mock, patch, ANY

+from faker import Faker

from commons.data_access_layer.database import EventContext

from time_tracker_api.activities.activities_model import (

ActivityCosmosDBRepository,

ActivityCosmosDBModel,

+ create_dao,

)

+faker = Faker()

+

-@patch(

- 'time_tracker_api.activities.activities_model.ActivityCosmosDBRepository.create_sql_condition_for_visibility'

-)

@patch(

'time_tracker_api.activities.activities_model.ActivityCosmosDBRepository.find_partition_key_value'

)

@@ -20,10 +20,10 @@ def test_find_all_with_id_in_list(

activity_repository: ActivityCosmosDBRepository,

):

expected_item = {

- 'id': 'id1',

- 'name': 'testing',

- 'description': 'do some testing',

- 'tenant_id': 'tenantid1',

+ 'id': faker.uuid4(),

+ 'name': faker.name(),

+ 'description': faker.sentence(nb_words=4),

+ 'tenant_id': faker.uuid4(),

}

query_items_mock = Mock(return_value=[expected_item])

@@ -41,3 +41,25 @@ def test_find_all_with_id_in_list(

activity = result[0]

assert isinstance(activity, ActivityCosmosDBModel)

assert activity.__dict__ == expected_item

+

+

+def test_create_activity_should_add_active_status(

+ mocker,

+):

+ activity_payload = {

+ 'name': faker.name(),

+ 'description': faker.sentence(nb_words=5),

+ 'tenant_id': faker.uuid4(),

+ }

+ activity_repository_create_mock = mocker.patch.object(

+ ActivityCosmosDBRepository, 'create'

+ )

+

+ activity_dao = create_dao()

+ activity_dao.create(activity_payload)

+

+ expect_argument = copy.copy(activity_payload)

+ expect_argument['status'] = 'active'

+ activity_repository_create_mock.assert_called_with(

+ data=expect_argument, event_context=ANY

+ )
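
The new test relies on `unittest.mock.ANY` so that only the `data` argument is compared strictly while `event_context` is accepted whatever its value. The following is a minimal, self-contained sketch of that technique; `create_with_status` is a hypothetical stand-in and not the project's DAO.

```python
import copy
from unittest.mock import ANY, Mock


def create_with_status(repository, payload):
    """Hypothetical stand-in for a DAO `create` that adds a default status."""
    data = copy.copy(payload)
    data["status"] = "active"
    return repository.create(data=data, event_context=object())


repository_mock = Mock()
payload = {"name": "testing"}
create_with_status(repository_mock, payload)

# ANY compares equal to every value, so only `data` is checked strictly.
repository_mock.create.assert_called_with(
    data={"name": "testing", "status": "active"}, event_context=ANY
)
```

Copying the payload before adding `status` keeps the caller's dictionary unchanged, which is the same reason the test builds `expect_argument` with `copy.copy`.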

diff --git a/tests/time_tracker_api/time_entries/time_entries_namespace_test.py b/tests/time_tracker_api/time_entries/time_entries_namespace_test.py

index 8f22f45f..ce4a3a23 100644

--- a/tests/time_tracker_api/time_entries/time_entries_namespace_test.py

+++ b/tests/time_tracker_api/time_entries/time_entries_namespace_test.py

@@ -8,12 +8,8 @@

from pytest_mock import MockFixture, pytest

from utils.time import (

- get_current_year,

- get_current_month,

current_datetime,

current_datetime_str,

- get_date_range_of_month,

- datetime_str,

)

from utils import worked_time

from time_tracker_api.time_entries.time_entries_model import (

@@ -204,10 +200,6 @@ def test_get_time_entry_should_succeed_with_valid_id(

'time_tracker_api.time_entries.time_entries_dao.TimeEntriesCosmosDBDao.handle_date_filter_args',

Mock(),

)

-@patch(

- 'time_tracker_api.time_entries.time_entries_repository.TimeEntryCosmosDBRepository.create_sql_date_range_filter',

- Mock(),

-)

@patch(

'commons.data_access_layer.cosmos_db.CosmosDBRepository.generate_params',

Mock(),

@@ -232,7 +224,6 @@ def test_get_time_entries_by_type_of_user_when_is_user_tester(

expected_user_ids,

):

test_user_id = "id1"

- non_test_user_id = "id2"

te1 = TimeEntryCosmosDBModel(

{

"id": '1',

@@ -285,10 +276,6 @@ def test_get_time_entries_by_type_of_user_when_is_user_tester(

'time_tracker_api.time_entries.time_entries_dao.TimeEntriesCosmosDBDao.handle_date_filter_args',

Mock(),

)

-@patch(

- 'time_tracker_api.time_entries.time_entries_repository.TimeEntryCosmosDBRepository.create_sql_date_range_filter',

- Mock(),

-)

@patch(

'commons.data_access_layer.cosmos_db.CosmosDBRepository.generate_params',

Mock(),

@@ -313,7 +300,6 @@ def test_get_time_entries_by_type_of_user_when_is_not_user_tester(

expected_user_ids,

):

test_user_id = "id1"

- non_test_user_id = "id2"

te1 = TimeEntryCosmosDBModel(

{

"id": '1',

@@ -386,7 +372,6 @@ def test_get_time_entry_should_succeed_with_valid_id(

)

def test_get_time_entry_raise_http_exception(

client: FlaskClient,

- mocker: MockFixture,

valid_header: dict,

valid_id: str,

http_exception: HTTPException,

@@ -407,7 +392,6 @@ def test_get_time_entry_raise_http_exception(

def test_update_time_entry_calls_partial_update_with_incoming_payload(

client: FlaskClient,

- mocker: MockFixture,

valid_header: dict,

valid_id: str,

owner_id: str,

@@ -465,7 +449,6 @@ def test_update_time_entry_should_reject_bad_request(

def test_update_time_entry_raise_not_found(

client: FlaskClient,

- mocker: MockFixture,

valid_header: dict,

valid_id: str,

owner_id: str,

@@ -499,7 +482,6 @@ def test_update_time_entry_raise_not_found(

def test_delete_time_entry_calls_delete(

client: FlaskClient,

- mocker: MockFixture,

valid_header: dict,

valid_id: str,

time_entries_dao,

@@ -529,7 +511,6 @@ def test_delete_time_entry_calls_delete(

)

def test_delete_time_entry_raise_http_exception(

client: FlaskClient,

- mocker: MockFixture,

valid_header: dict,

valid_id: str,

http_exception: HTTPException,

@@ -554,7 +535,6 @@ def test_delete_time_entry_raise_http_exception(

def test_stop_time_entry_calls_partial_update(

client: FlaskClient,

- mocker: MockFixture,

valid_header: dict,

valid_id: str,

time_entries_dao,

@@ -581,7 +561,6 @@ def test_stop_time_entry_calls_partial_update(

def test_stop_time_entry_raise_unprocessable_entity(

client: FlaskClient,

- mocker: MockFixture,

valid_header: dict,

valid_id: str,

time_entries_dao,

@@ -611,7 +590,6 @@ def test_stop_time_entry_raise_unprocessable_entity(

def test_restart_time_entry_calls_partial_update(

client: FlaskClient,

- mocker: MockFixture,

valid_header: dict,

valid_id: str,

time_entries_dao,

@@ -638,7 +616,6 @@ def test_restart_time_entry_calls_partial_update(

def test_restart_time_entry_raise_unprocessable_entity(

client: FlaskClient,

- mocker: MockFixture,

valid_header: dict,

valid_id: str,

time_entries_dao,

diff --git a/tests/time_tracker_api/time_entries/time_entries_query_builder_test.py b/tests/time_tracker_api/time_entries/time_entries_query_builder_test.py

index fd23bd01..f3fa7efa 100644

--- a/tests/time_tracker_api/time_entries/time_entries_query_builder_test.py

+++ b/tests/time_tracker_api/time_entries/time_entries_query_builder_test.py

@@ -6,7 +6,7 @@

from utils.repository import remove_white_spaces

-def test_TimeEntryQueryBuilder_is_subclass_CosmosDBQueryBuilder():

+def test_time_entry_query_builder_should_be_subclass_of_cosmos_query_builder():

query_builder = CosmosDBQueryBuilder()

time_entries_query_builder = TimeEntryQueryBuilder()

@@ -15,50 +15,6 @@ def test_TimeEntryQueryBuilder_is_subclass_CosmosDBQueryBuilder():

)

-def test_add_sql_date_range_condition_should_update_where_list():

- start_date = "2021-03-19T05:07:00.000Z"

- end_date = "2021-03-25T10:00:00.000Z"

- time_entry_query_builder = (

- TimeEntryQueryBuilder().add_sql_date_range_condition(

- {

- "start_date": start_date,

- "end_date": end_date,

- }

- )

- )

- expected_params = [

- {"name": "@start_date", "value": start_date},

- {"name": "@end_date", "value": end_date},

- ]

- assert len(time_entry_query_builder.where_conditions) == 1

- assert len(time_entry_query_builder.parameters) == len(expected_params)

- assert time_entry_query_builder.get_parameters() == expected_params

-

-

-def test_build_with_add_sql_date_range_condition():

- time_entry_query_builder = (

- TimeEntryQueryBuilder()

- .add_sql_date_range_condition(

- {

- "start_date": "2021-04-19T05:00:00.000Z",

- "end_date": "2021-04-20T10:00:00.000Z",

- }

- )

- .build()

- )

-

- expected_query = """

- SELECT * FROM c

- WHERE ((c.start_date BETWEEN @start_date AND @end_date) OR

- (c.end_date BETWEEN @start_date AND @end_date))

- """

- query = time_entry_query_builder.get_query()

-

- assert remove_white_spaces(query) == remove_white_spaces(expected_query)

- assert len(time_entry_query_builder.where_conditions) == 1

- assert len(time_entry_query_builder.get_parameters()) == 2

-

-

def test_add_sql_interception_with_date_range_condition():

start_date = "2021-01-19T05:07:00.000Z"

end_date = "2021-01-25T10:00:00.000Z"

diff --git a/tests/utils/query_builder_test.py b/tests/utils/query_builder_test.py

index 742730db..dc66b4f1 100644

--- a/tests/utils/query_builder_test.py

+++ b/tests/utils/query_builder_test.py

@@ -331,3 +331,93 @@ def test_add_sql_not_in_condition(

)

assert len(query_builder.where_conditions) == len(expected_not_in_list)

assert query_builder.where_conditions == expected_not_in_list

+

+

+def test_add_sql_date_range_condition_should_update_where_list():

+ start_date = "2021-03-19T05:07:00.000Z"

+ end_date = "2021-03-25T10:00:00.000Z"

+ query_builder = CosmosDBQueryBuilder().add_sql_date_range_condition(

+ {

+ "start_date": start_date,

+ "end_date": end_date,

+ }

+ )

+ expected_params = [

+ {"name": "@start_date", "value": start_date},

+ {"name": "@end_date", "value": end_date},

+ ]

+ assert len(query_builder.where_conditions) == 1

+ assert len(query_builder.parameters) == len(expected_params)

+ assert query_builder.get_parameters() == expected_params

+

+

+def test_build_with_add_sql_date_range_condition():

+ query_builder = (

+ CosmosDBQueryBuilder()

+ .add_sql_date_range_condition(

+ {

+ "start_date": "2021-04-19T05:00:00.000Z",

+ "end_date": "2021-04-20T10:00:00.000Z",

+ }

+ )

+ .build()

+ )

+

+ expected_query = """

+ SELECT * FROM c

+ WHERE ((c.start_date BETWEEN @start_date AND @end_date) OR

+ (c.end_date BETWEEN @start_date AND @end_date))

+ """

+ query = query_builder.get_query()

+

+ assert remove_white_spaces(query) == remove_white_spaces(expected_query)

+ assert len(query_builder.where_conditions) == 1

+ assert len(query_builder.get_parameters()) == 2

+

+

+def test_add_sql_active_condition_should_update_where_conditions():

+ status_value = 'active'

+ expected_active_query = f"""

+ SELECT * FROM c

+ WHERE NOT IS_DEFINED(c.status) OR (IS_DEFINED(c.status) AND c.status = '{status_value}')

+ """

+ expected_condition = f"NOT IS_DEFINED(c.status) OR (IS_DEFINED(c.status) AND c.status = '{status_value}')"

+

+ query_builder = (

+ CosmosDBQueryBuilder()

+ .add_sql_active_condition(status_value=status_value)

+ .build()

+ )

+

+ active_query = query_builder.get_query()

+

+ assert remove_white_spaces(active_query) == remove_white_spaces(

+ expected_active_query

+ )

+ assert len(query_builder.where_conditions) == 1

+ assert query_builder.where_conditions[0] == expected_condition

+

+

+def test_add_sql_inactive_condition_should_update_where_conditions():

+ status_value = 'inactive'

+ expected_inactive_query = f"""

+ SELECT * FROM c

+ WHERE (IS_DEFINED(c.status) AND c.status = '{status_value}')

+ """

+ expected_condition = (

+ f"(IS_DEFINED(c.status) AND c.status = '{status_value}')"

+ )

+

+ query_builder = (

+ CosmosDBQueryBuilder()

+ .add_sql_active_condition(status_value=status_value)

+ .build()

+ )

+

+ inactive_query = query_builder.get_query()

+

+ assert remove_white_spaces(inactive_query) == remove_white_spaces(

+ expected_inactive_query

+ )

+ assert len(query_builder.where_conditions) == 1

+ assert query_builder.where_conditions[0] == expected_condition

diff --git a/time-tracker.sh b/time-tracker.sh

new file mode 100644

index 00000000..fe6b0068

--- /dev/null

+++ b/time-tracker.sh

@@ -0,0 +1,11 @@

+#!/bin/sh

+COMMAND="$*"

+PYTHON_COMMAND="pip install azure-functions"

+API_CONTAINER_NAME="time-tracker-backend_api"

+

+execute(){

+ docker exec -ti $API_CONTAINER_NAME sh -c "$PYTHON_COMMAND"

+ docker exec -ti $API_CONTAINER_NAME sh -c "$COMMAND"

+}

+

+execute

\ No newline at end of file

diff --git a/time_tracker_api/activities/activities_model.py b/time_tracker_api/activities/activities_model.py

index 2a1de900..83f10fff 100644

--- a/time_tracker_api/activities/activities_model.py

+++ b/time_tracker_api/activities/activities_model.py

@@ -10,11 +10,7 @@

from time_tracker_api.database import CRUDDao, APICosmosDBDao

from typing import List, Callable

from commons.data_access_layer.database import EventContext

-from utils.repository import (

- convert_list_to_tuple_string,

- create_sql_in_condition,

-)

-from utils.query_builder import CosmosDBQueryBuilder, Order

+from utils.query_builder import CosmosDBQueryBuilder

class ActivityDao(CRUDDao):

@@ -150,6 +146,13 @@ def get_all(

)

return activities

+ def create(self, activity_payload: dict):

+ event_ctx = self.create_event_context('create')

+ activity_payload['status'] = 'active'

+ return self.repository.create(

+ data=activity_payload, event_context=event_ctx

+ )

+

def create_dao() -> ActivityDao:

repository = ActivityCosmosDBRepository()

diff --git a/time_tracker_api/time_entries/time_entries_query_builder.py b/time_tracker_api/time_entries/time_entries_query_builder.py

index 3147d43f..2417ac85 100644

--- a/time_tracker_api/time_entries/time_entries_query_builder.py

+++ b/time_tracker_api/time_entries/time_entries_query_builder.py

@@ -5,23 +5,6 @@ class TimeEntryQueryBuilder(CosmosDBQueryBuilder):

def __init__(self):

super(TimeEntryQueryBuilder, self).__init__()

- def add_sql_date_range_condition(self, date_range: tuple = None):

- if date_range and len(date_range) == 2:

- start_date = date_range['start_date']

- end_date = date_range['end_date']

- condition = """

- ((c.start_date BETWEEN @start_date AND @end_date) OR

- (c.end_date BETWEEN @start_date AND @end_date))

- """

- self.where_conditions.append(condition)

- self.parameters.extend(

- [

- {'name': '@start_date', 'value': start_date},

- {'name': '@end_date', 'value': end_date},

- ]

- )

- return self

-

def add_sql_interception_with_date_range_condition(

self, start_date, end_date

):

diff --git a/time_tracker_api/version.py b/time_tracker_api/version.py

index 8935b5b5..a4b38359 100644

--- a/time_tracker_api/version.py

+++ b/time_tracker_api/version.py

@@ -1 +1 @@

-__version__ = '0.37.0'

+__version__ = '0.37.1'

diff --git a/utils/query_builder.py b/utils/query_builder.py

index 2899aab4..b66f9ec1 100644

--- a/utils/query_builder.py

+++ b/utils/query_builder.py

@@ -34,6 +34,41 @@ def add_sql_in_condition(

self.where_conditions.append(f"c.{attribute} IN {ids_values}")

return self

+ def add_sql_active_condition(self, status_value: str):

+ if status_value:

+ not_defined_condition = ''

+ condition_operand = ''

+ if status_value == 'active':

+ not_defined_condition = 'NOT IS_DEFINED(c.status)'

+ condition_operand = ' OR '

+

+ defined_condition = (

+ f"(IS_DEFINED(c.status) AND c.status = '{status_value}')"

+ )

+ condition = (

+ not_defined_condition + condition_operand + defined_condition

+ )

+ self.where_conditions.append(condition)

+ return self

+

+ def add_sql_date_range_condition(self, date_range: dict = None):

+ if date_range:

+ start_date = date_range.get('start_date')

+ end_date = date_range.get('end_date')

+ if start_date and end_date:

+ condition = """

+ ((c.start_date BETWEEN @start_date AND @end_date) OR

+ (c.end_date BETWEEN @start_date AND @end_date))

+ """

+ self.where_conditions.append(condition)

+ self.parameters.extend(

+ [

+ {'name': '@start_date', 'value': start_date},

+ {'name': '@end_date', 'value': end_date},

+ ]

+ )

+ return self

+

def add_sql_where_equal_condition(self, data: dict = None):

if data:

for k, v in data.items():